As an experienced SEO expert, you are well aware of the implications technical issues can have on your website’s search engine optimization. Even the smallest technical issue can slow down Google’s discovery of your web pages, affecting your search rankings and overall search visibility.

In this blog post, we’ll discuss some common technical SEO issues that are frequently misconfigured or overlooked by web developers or digital marketers. We’ll cover everything from broken links to duplicate content issues and provide actionable tips on fixing them.

We’ll also explore why poor user experience (UX) can negatively impact your site’s SEO strategy and what you can do to create an optimized UX for both users and search engines alike. Additionally, we’ll dive into on-page optimization techniques such as meta descriptions, title tags, canonical tags, crawl directives, and more.

By the end of this post, you will understand how technical issues can impair your site’s SEO results and what measures to take to resolve them. You will also learn practical fixes so that Google’s crawlers can reach all of your content without any hiccups.

So let’s get started!

Common Technical SEO Issues and How to Fix Them

#1. No HTTPS Security

A lack of HTTPS security is a major technical SEO issue that can seriously affect any website. It’s important to understand the risks and take steps to ensure your site has the proper security measures in place.

What Is HTTPS?

HTTPS stands for Hypertext Transfer Protocol Secure. It is the encrypted version of HTTP, used by websites to protect data sent between them and their visitors.

When a website uses HTTPS, all communication between the server and the browser is encrypted, making it much more difficult for hackers or other malicious actors to intercept or read sensitive information such as passwords and credit card numbers.

Why Is No HTTPS Security A Problem?

Without secure connections enabled on your website, anyone who visits risks having their personal information stolen or misused. Additionally, Google has stated that it treats HTTPS as a ranking signal, giving preference in search rankings to sites with SSL/TLS certificates installed (the technology behind HTTPS).

Not having a certificate could therefore result in lower visibility on search engines, which could lead to fewer visitors overall. Finally, browsers like Chrome and Firefox may display warnings about visiting insecure sites, making potential customers wary of doing business with you online.
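One way to spot this problem is to look at what a plain `http://` request to your site returns. The sketch below is a minimal illustration, not a full audit: `redirects_to_https` is a hypothetical helper (not part of any library) that takes the status code and `Location` header of such a response and reports whether visitors are permanently redirected to an HTTPS URL.

```python
from urllib.parse import urlparse

# Status codes that signal a permanent redirect.
PERMANENT_REDIRECTS = {301, 308}


def redirects_to_https(status, location):
    """Return True if a response permanently redirects to an https:// URL.

    `status` is the HTTP status code and `location` is the value of the
    Location header (or None if the header is absent).
    """
    if status not in PERMANENT_REDIRECTS or not location:
        return False
    return urlparse(location).scheme == "https"


# Example responses to a request for http://www.example.com/ (made-up data).
secure_site = redirects_to_https(301, "https://www.example.com/")
insecure_site = redirects_to_https(200, None)
```

Here `secure_site` is true because the response sends visitors to the HTTPS version, while `insecure_site` is false because the page is served over plain HTTP.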

How To Fix No HTTPS Security Issues?

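To fix this, install an SSL/TLS certificate on your server, redirect every HTTP URL to its HTTPS equivalent with a permanent (301) redirect, and update internal links so they point to the HTTPS versions.

Next up is indexing. While it can be difficult to determine why a website is not indexed correctly, understanding the primary reasons behind this issue will make it easier to resolve.

So, let’s have a brief look at it.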

#2. The Site Isn’t Indexed Correctly

When it comes to technical SEO, one of the most common issues is a website that isn’t indexed correctly. This can be caused by a number of factors, from incorrect robots.txt settings to broken links and more. Any webmaster or SEO professional needs to understand how this issue can arise and what steps to take to fix it.

If you see that your site isn’t being indexed correctly, check your robots.txt file first. This file contains instructions for search engine crawlers on which pages they may crawl and which ones they should stay away from.

Errors here can keep individual pages out of the index or, even worse, block crawlers from entire sections of your site.

Another potential cause of an incorrectly indexed website is broken links within the page structure itself – these can often lead crawlers down dead-end paths where they won’t find anything useful (or, worse still, find something malicious).

To avoid this issue, make sure all internal links are working properly before submitting your site for indexing – use tools like Google Search Console or Screaming Frog SEO Spider to help identify any broken links quickly and easily.

Finally, if you’ve checked both the robots.txt file and the link structure but still aren’t seeing results, there may be an issue with the way your content is structured on each page. Make sure that headings are clearly marked up using HTML tags such as H1 and H2, and that meta descriptions accurately reflect what each page is about, so that search engines know exactly what content exists on each page.

All in all, while it is not always easy to diagnose why a website isn’t being indexed correctly, understanding the key causes behind this problem will go a long way toward resolving it quickly.

#3. No XML Sitemaps

XML sitemaps are an essential part of any website’s technical SEO. They provide search engines with a comprehensive list of all the pages on your site, allowing them to crawl and index more efficiently.

Unfortunately, many websites don’t have XML sitemaps set up correctly, or at all. This can be a major issue for SEO performance, as it makes it harder for search engine crawlers to find important content on your site.

Here is how you can fix this problem.

The first step in fixing this problem is to create an XML sitemap if you don’t already have one.

You can do this manually by writing out each page URL in the correct format, or by using an automated tool like Yoast SEO, which will generate a complete sitemap for you.
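To make "the correct format" concrete, here is a minimal sketch that builds a sitemap with Python’s standard library. The function name `build_sitemap` and the example URLs are assumptions for illustration. The namespace is the one defined by the sitemaps.org protocol, and optional fields such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build a sitemap XML string from an iterable of absolute page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/",
])
print(sitemap)
```

Each page gets one `<url>` entry with a `<loc>` child holding its address. A plugin or CMS will typically generate the same structure for you.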

Once you have created your XML sitemap, make sure that it is accessible from the root domain (e.g., www.example.com/sitemap_index.xml).

Once your XML sitemap is live, check that it contains all of the relevant URLs on your website and none of the irrelevant ones (such as duplicate pages or URLs that return errors).

If any URLs are missing, add them before submitting the sitemap to Google Search Console so that search engines can index them properly.

It is essential to update and resubmit your XML sitemap whenever your site changes, such as when you publish new content or update existing content. Make sure that any new pages added to your website are included in the sitemap, too; otherwise, crawlers may be slow to discover and index them.

Doing this helps search engines keep their indexes up to date with what’s happening on your site, which could really pay off in terms of improved visibility over time.

#4. Duplicate Content Issues

Duplicate content is a common technical SEO issue that can confuse search engine crawlers and prevent them from properly indexing your website. This can lead to decreased visibility in the SERPs, as well as other problems, such as lower click-through rates and reduced organic traffic.

The most common type of duplicate content is when two or more web pages have identical or very similar content. This could be because multiple versions of the same page are accessible through different URLs or because you’ve copied text from another source without citing it properly.

It’s important to identify any instances of duplicate content on your site so that you can take steps to fix them.
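As a rough illustration of how duplicates can be detected, the sketch below groups URLs whose text is identical once case and whitespace are ignored. It assumes you already have the extracted text of each page in a dictionary (the `pages` data here is made up), and it only catches exact matches. Near-duplicates would need a similarity measure, which is beyond this sketch.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash page text after lowercasing and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose text is identical after normalization.

    `pages` maps each URL to the text extracted from that page.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Made-up example data: the first two URLs serve the same content.
pages = {
    "https://www.example.com/shoes": "Red running shoes.  Free shipping.",
    "https://www.example.com/shoes?ref=nav": "red running shoes. free shipping.",
    "https://www.example.com/about": "About our company.",
}
duplicates = find_duplicates(pages)
```

In this example, `duplicates` contains one group holding the two `/shoes` URLs, which is the kind of pair a canonical tag or redirect should resolve.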

How to Fix It?

One way to find duplicates is to use a tool like Copyscape, which will scan your website for plagiarism and help you find potential cases of duplicate content. Once you’ve identified the problem areas, you can start addressing them.

Don’t overlook internal links when addressing duplicate content issues. Make sure they all point to one version of each page instead of being spread across multiple versions with slightly different URLs (this is known as “canonicalization”).

Doing this will ensure search engines know which version should be indexed and ranked in their results pages. Otherwise, they may split authority between multiple copies, so that no single version receives full credit for its ranking power.
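The idea of pointing every link at one version can be sketched as a small normalization function. The rules below (force HTTPS, force one preferred host, drop trailing slashes, and drop query strings and fragments) are assumptions chosen for illustration. On a real site, some query parameters select different content and must be kept.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url, preferred_host="www.example.com"):
    """Map URL variants of one page onto a single preferred form.

    Assumed rules: always HTTPS, always `preferred_host`, no trailing
    slash (except for the root), no query string, no fragment.
    """
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", preferred_host, path, "", ""))


variants = [
    "http://example.com/blog/",
    "https://www.example.com/blog?utm_source=newsletter",
    "https://www.example.com/blog#comments",
]
canonical_forms = {canonical_url(v) for v in variants}
```

All three variants collapse to the same address, so `canonical_forms` holds a single URL. Internal links rewritten this way no longer spread authority across slightly different addresses.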

Finally, keep an eye out for external sites linking to outdated versions of your pages, as these links need updating too. If someone has linked directly to an old URL, contact them and politely ask them to update the link. People often don’t realize a link is out of date until it’s pointed out.

#5. Slow Page Load Times

Page load times are a major factor in any website’s user experience. Slow-loading pages lead visitors to abandon your website before they can look around, causing higher bounce rates and decreased visibility on search engine results pages. According to Portent, the highest ecommerce conversion rates occur on sites with a load time between 0 and 2 seconds.

So, to ensure visitors stay on your site and search engine rankings remain high, you can take steps to optimize page loading speed. Here’s what you can do.

Optimizing images is an important step for improving page load times. This includes compressing images and reducing their file size so that they don’t take as long to download when someone visits your website.

Minifying code is also helpful; this involves removing unnecessary characters from HTML, CSS, and JavaScript files without changing their functionality so that pages take less time to render.
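To show what "removing unnecessary characters" means in practice, here is a deliberately naive CSS minifier. It is a sketch for illustration only: it strips comments and collapses whitespace with regular expressions, and it does not protect strings or `url(...)` values the way a production minifier would.

```python
import re


def minify_css(css):
    """Naively minify CSS: drop comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim around punctuation
    return css.strip()


css = """
/* Main layout */
body {
    color: red;
    margin: 0 auto;
}
"""
minified = minify_css(css)
print(minified)  # body{color:red;margin:0 auto;}
```

The rules still mean the same thing to a browser, but the file is a fraction of its original size. In practice, a build tool or plugin usually handles this step.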

Enabling browser caching cuts down the data users need to download when they return to a page on your website, as some content can be stored locally instead of being reloaded each time.

Finally, reducing redirects is essential because each additional redirect increases the time required for a web page to load, due to the extra server requests involved.
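The cost of chained redirects is easier to see when you count the hops. The sketch below assumes the redirects are available as a simple mapping from each URL to its target (the `redirects` data is made up). The hypothetical `redirect_chain` helper follows that mapping until it reaches a page that no longer redirects.

```python
def redirect_chain(start, redirects, max_hops=10):
    """Follow `redirects` (a URL -> target URL mapping) from `start`.

    Returns the list of URLs visited, ending at the final destination.
    """
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        if target in chain:  # stop if the redirects loop back on themselves
            break
        chain.append(target)
    return chain


# Made-up example: three separate redirects before the real page loads.
redirects = {
    "http://example.com/old": "https://example.com/old",
    "https://example.com/old": "https://www.example.com/old",
    "https://www.example.com/old": "https://www.example.com/new",
}
chain = redirect_chain("http://example.com/old", redirects)
hops = len(chain) - 1
```

Here `hops` is 3, so the browser makes three extra requests before it can load the page. Pointing the first URL straight at the final destination would cut that to one.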

In conclusion, slow page loading speeds hurt both visitors and rankings and should not go unnoticed. The strategies above can enhance the visitor experience by tackling slow page load times.

#6. Missing or Incorrect Robots.txt

A missing or incorrect robots.txt file is a common technical SEO issue that can have a major impact on the success of your website.

A robots.txt file is an important part of any website’s architecture, as it helps search engine crawlers understand which parts of the site they should crawl and which they should not.

If this file is missing or incorrect, it can lead to problems with indexing and ranking in search engines, resulting in decreased visibility for your site.

What Is Robots.txt?

Robots.txt is a text file located at the root level of your domain that tells web crawlers what content they are allowed to access on your website and what content they should ignore when crawling through pages on your site.

It’s important to note that while some search engines may honor these instructions, others may not – so you need to make sure you have all bases covered when creating this file for maximum effectiveness.
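To see how these rules are read, the sketch below feeds a small example robots.txt file to `urllib.robotparser` from Python’s standard library and asks whether two URLs may be fetched. The file contents and URLs are made up for illustration. Real crawlers may resolve conflicting rules differently from this parser, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt contents (an assumption, not taken from a real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a crawler identifying itself as "Googlebot" may fetch each URL.
blog_allowed = parser.can_fetch("Googlebot", "https://www.example.com/blog/")
admin_allowed = parser.can_fetch("Googlebot", "https://www.example.com/admin/login")
```

With these rules, `blog_allowed` is true and `admin_allowed` is false: everything is open to crawlers except the `/admin/` section.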

Why Is It Important?

Having an accurate robots.txt file helps ensure that search engine bots spend their time on relevant pages, which means more efficient crawling and indexing of those pages for SERPs (Search Engine Results Pages).

Additionally, setting this file up correctly can help prevent duplicate content issues caused by multiple versions of the same page being crawled, something which could hurt both user experience and SEO performance if left unchecked.

How To Fix a Missing or Incorrect Robots.txt File?

If you find that there is no robots.txt file, or that one exists but contains errors, first create or correct it. On WordPress sites, a plugin such as Yoast SEO provides an easy-to-use interface for generating a custom file without any coding knowledge.

Once the file is created or updated, check that all necessary changes have been made before uploading it to the server via an FTP client such as FileZilla.

Finally, check that everything looks good using a robots.txt checker, such as the one in Google Search Console, or a crawler like Screaming Frog SEO Spider. Once that’s done, you can sit back and relax, knowing the job is done well.

#7. Broken Links

Broken links are among the most common technical SEO issues that can hurt your website’s ranking in search engine results pages (SERPs). Links that no longer lead to an active page or have been incorrectly typed are known as broken links.

To fix this issue, you need to regularly check for broken links using tools like Google Search Console and Screaming Frog.

Google Search Console

Google Search Console is a free tool that enables webmasters to track their website’s visibility and performance in SERPs, as well as identify indexing errors and broken links.

The “Crawl Errors” report shows any URLs with 404 errors, meaning they point to non-existent pages, as well as other server-side errors. You can then fix these URLs, either by redirecting them to a valid page on your website or by removing the links completely if there’s no applicable content.

Screaming Frog SEO Spider

Screaming Frog is another useful tool for finding broken links on your website. This desktop program crawls all the internal and external URLs on your website and highlights any that return an error code, such as 404 Not Found or 500 Internal Server Error.

Once you’ve identified the faulty URLs, it’s possible to either point them to different pages or take them out completely if there is no longer any related content.
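The same check can be sketched in a few lines of Python: collect every link on a page, look up its status code, and report the ones that return an error. In this sketch the status lookup is passed in as a function (`fetch_status` is a hypothetical parameter) and replaced by a made-up dictionary, so the example runs without network access. In real use you would supply a function that performs the HTTP request.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_links(html, fetch_status):
    """Return (url, status) pairs for links whose status code is 400 or higher.

    `fetch_status` is a caller-supplied function mapping a URL to its
    HTTP status code.
    """
    collector = LinkCollector()
    collector.feed(html)
    results = [(url, fetch_status(url)) for url in collector.links]
    return [(url, status) for url, status in results if status >= 400]


# Stand-in for real HTTP requests, for illustration only.
fake_statuses = {"/pricing": 200, "/old-page": 404, "/api": 500}
html = '<a href="/pricing">Pricing</a> <a href="/old-page">Old</a> <a href="/api">API</a>'
broken = find_broken_links(html, fake_statuses.get)
```

In this example, `broken` lists `/old-page` (404) and `/api` (500), which are the links to redirect or remove.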

Overall, maintaining working links is essential for successful SEO, so it’s critical to make sure all of your website’s links are working as intended. Moving on from this issue, let us look at how multiple homepage versions can also affect search engine optimization efforts.

#8. Multiple Versions of the Homepage

Multiple versions of the homepage are a common technical SEO issue that can have a major impact on your site’s search engine rankings if not addressed properly. The problem arises when multiple URLs point to the same content, such as “www.example.com/home” and “www.example.com/index”.

This is often caused by poor site design or by web developers and content managers who, without a clear understanding of how search engines work, create web pages with similar content but different URLs.

Use Google’s Bots

Google’s bots crawl sites in search of pertinent information, and it is essential that they can locate all relevant pages so that those pages are indexed accurately in the search results.

To ensure Google handles each version appropriately, add crawl directives such as canonical tags or robots meta tags that tell Google which version should be indexed and which should be left out of the index.

Overall, this will help keep any duplicate content issues from causing long-term damage to your site’s SEO performance.
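To check which directives a page actually carries, you can parse its HTML and read them back. The sketch below uses Python’s built-in `html.parser`. The class name `CrawlDirectiveParser` and the sample markup are assumptions for illustration, showing a duplicate homepage variant that points to the preferred URL.

```python
from html.parser import HTMLParser


class CrawlDirectiveParser(HTMLParser):
    """Extract the canonical URL and robots meta directives from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots = attrs.get("content")


# Sample markup for a homepage variant such as www.example.com/index.
html = """
<head>
  <link rel="canonical" href="https://www.example.com/">
  <meta name="robots" content="index, follow">
</head>
"""
page = CrawlDirectiveParser()
page.feed(html)
```

After parsing, `page.canonical` holds the preferred homepage URL and `page.robots` holds the robots directives. If every homepage variant reports the same canonical URL, search engines receive one consistent signal.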

Configure Content Management Systems

You also need to configure your Content Management System (CMS), such as WordPress or Drupal, correctly to ensure each page has its own unique title and description.

Otherwise, these platforms may unintentionally create multiple homepage versions due to misconfigured settings, something most digital marketers overlook until it starts negatively affecting their site’s SEO performance.

Ensure Appropriate Crawl Directives

Additionally, check that the crawl directives you add, such as canonical tags or robots meta tags, are correct, so that duplicate content issues don’t cause long-term damage.

Ultimately, it is essential to make sure that search engines index only one version of the homepage, as having multiple variants could lead to significant SEO problems. Moving on, let’s take a look at how missing meta tags can impact your website’s visibility in search engines.

#9. Missing Meta Tags

Missing meta tags are a common technical SEO issue that can have a major impact on the performance of your website. Meta tags provide search engines with information about the content of your web pages, and when they’re missing or incomplete, it can be difficult for search engine crawlers to understand what each page is about.

This makes it harder for them to index and rank your pages in their results. The most important meta tag is the title tag; this should be unique for every page on your site and include relevant keywords related to the content on that page.

Title Tags

Title tags help search engines determine which searches are most likely to lead users to that particular webpage, so including accurate keywords is essential if you want people to find it easily.

Meta Descriptions

Meta descriptions also play an important role in SEO as they appear beneath titles in SERPs (search engine result pages). They should accurately describe what visitors will find when they click through from the SERP listing – think of them as mini adverts.

If you don’t write a meta description, Google will create one automatically using text from the page itself. These automatic snippets may not always reflect the page’s contents accurately, so writing custom ones yourself is recommended.

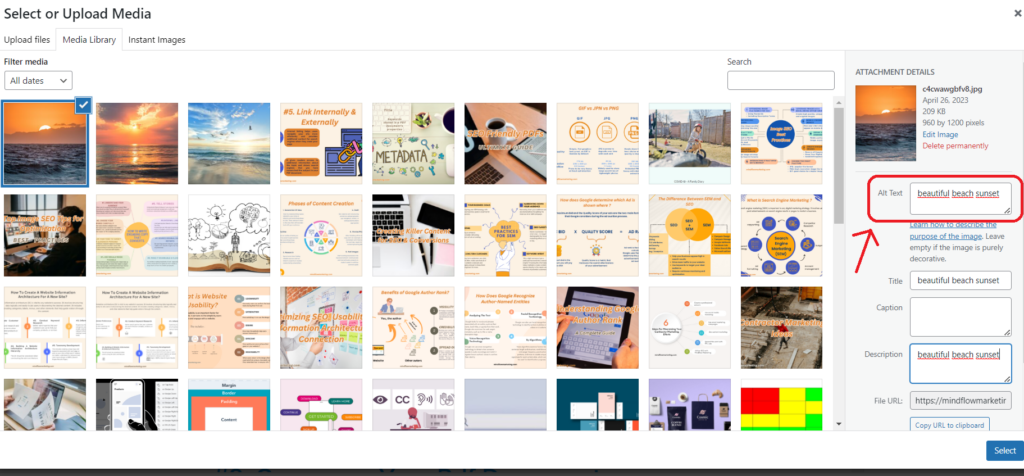

Image Alt Attributes

Another key metadata element is the image alt attribute. Alt text allows screen readers used by visually impaired people to describe images on websites, which would otherwise be hard to understand due to a lack of context or visual cues.

Alt attributes should be descriptive yet concise and contain relevant keywords where possible. Failing to add them could mean missing out on valuable traffic from users with disabilities who rely on assistive technologies such as screen readers or voice-controlled software.
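A quick way to find gaps in these elements is to scan a page’s HTML for them. The following sketch is a minimal audit built on Python’s `html.parser`. The class name `MetadataAudit` and the sample page are assumptions for illustration. Note that it flags every image without alt text, even though an empty alt can be legitimate for purely decorative images.

```python
from html.parser import HTMLParser


class MetadataAudit(HTMLParser):
    """Record which basic metadata elements a page provides."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

    def issues(self):
        """Return a list of human-readable problems found on the page."""
        found = []
        if not self.title.strip():
            found.append("missing title")
        if not (self.description or "").strip():
            found.append("missing meta description")
        for src in self.images_missing_alt:
            found.append(f"image without alt text: {src}")
        return found


# Sample page: has a title, but no meta description and one image without alt.
html = """
<html><head><title>Blue Widgets | Example Shop</title></head>
<body><img src="/img/widget.png"><img src="/img/logo.png" alt="Example Shop logo"></body></html>
"""
audit = MetadataAudit()
audit.feed(html)
problems = audit.issues()
```

For the sample page, `problems` reports the missing meta description and the one image that lacks alt text. Running a check like this across every page gives you a to-do list for the metadata described above.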

The Fix

In addition to the elements above, robots meta tags tell search engine crawlers how they should treat a page, such as whether it should be indexed and whether its links should be followed. They can help prevent duplicate content issues by telling crawlers to keep certain pages out of the index, something which could otherwise cause problems further down the line.

Overall, ensuring all elements of metadata are present and up-to-date is essential if you want maximum visibility in organic search results – neglecting even one small detail could have serious consequences for both user experience and SEO performance alike.

Conclusion

All in all, becoming familiar with the most common technical SEO issues and the ways to fix them is an absolute necessity for any website operator or web designer. Identifying and addressing these issues can help you improve user experience, as well as boost organic search engine traffic.

So, unlock the potential of your website by learning how to identify and fix common technical SEO issues. Start today with our comprehensive guide on improving search engine visibility.