Are you looking to take your website’s search engine optimization game to the next level? If so, then this is the ultimate guide for you. It will show you how simple technical SEO can be with our comprehensive approach.

From understanding technical SEO basics to optimizing site architecture, crawling and indexing, duplicate content, page speed, international targeting, dead links, structured data and XML sitemaps, this guide will cover all the essential topics for a successful implementation.

Finally, we’ll discuss monitoring progress with regular audits – an important part of any effective technical SEO strategy. Dive into our definitive resource today and prepare to transform your website’s online visibility.

What is Technical SEO?

Technical SEO is an essential part of any comprehensive SEO strategy. It covers the technical aspects of a website that can affect its visibility in search engine results pages (SERPs).

Technical SEO ensures search engines understand, crawl, and index your website correctly. This includes optimizing page titles and meta descriptions, creating a sitemap, using structured data markup to help search engines better understand the content on your site, and more.

Why Is Technical SEO Important?

You may be tempted to disregard this aspect of SEO entirely; however, it is critical to your organic traffic. Even if your content is the most thorough, useful, and well-written on the web, few people will see it if search engines can’t crawl it.

It’s like a tree falling in the forest when no one is around to hear it: does it make a sound? Without a solid technical SEO foundation, your content may as well be silent as far as search engines are concerned.

Understanding Technical SEO

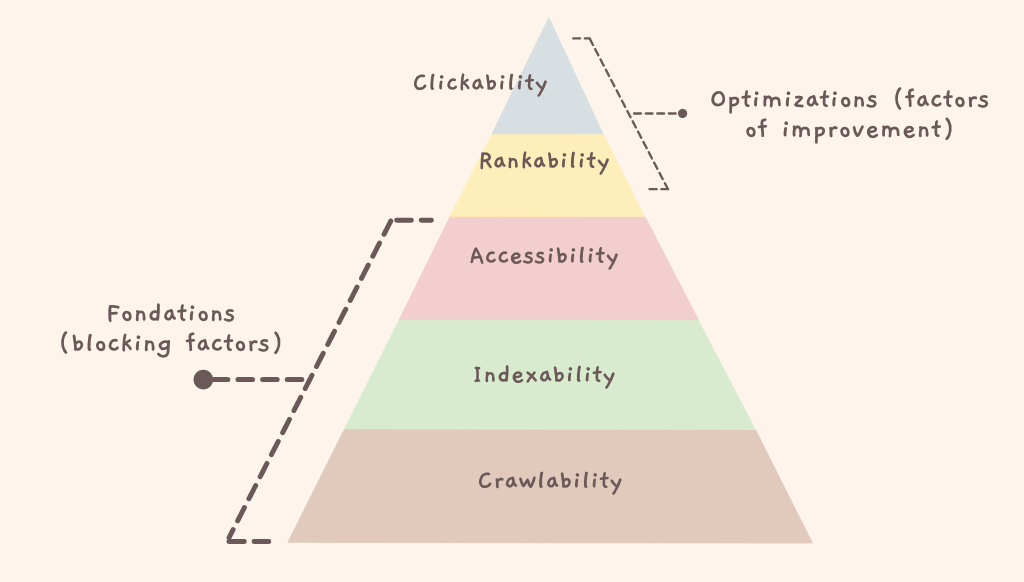

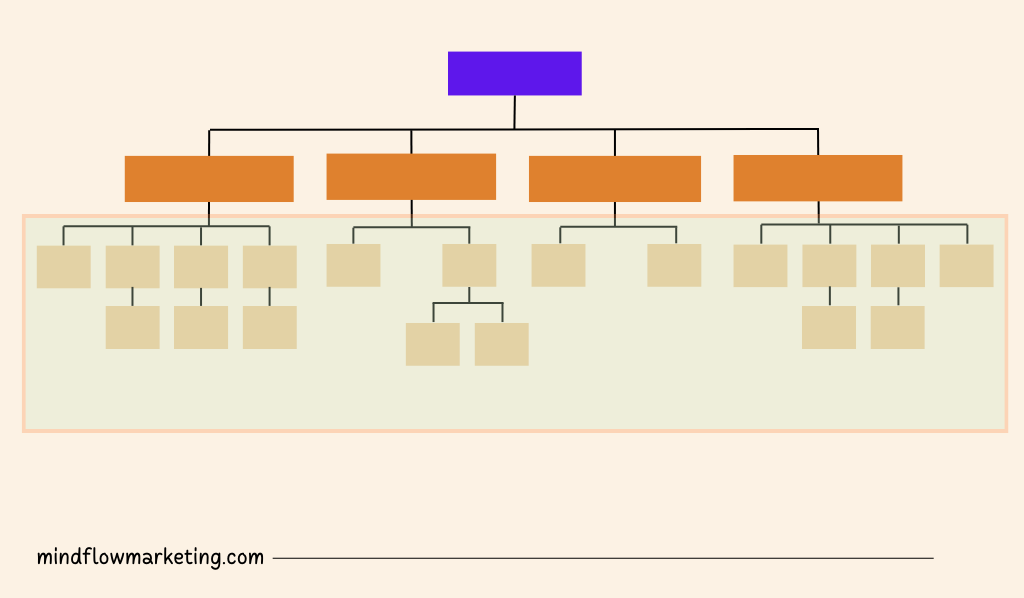

Technical SEO is a beast that is best broken down into manageable chunks. If you’re like me, you tackle big tasks in chunks and with checklists. Everything we’ve discussed so far can be classified into one of five categories, each with its own set of actionable items.

This beautiful graphic, reminiscent of Maslow’s Hierarchy of Needs but remixed for search engine optimization, best illustrates these five categories and their place in the technical SEO hierarchy. (Note that we use the more common term “Rendering” instead of “Accessibility”.)

Optimizing Site Structure

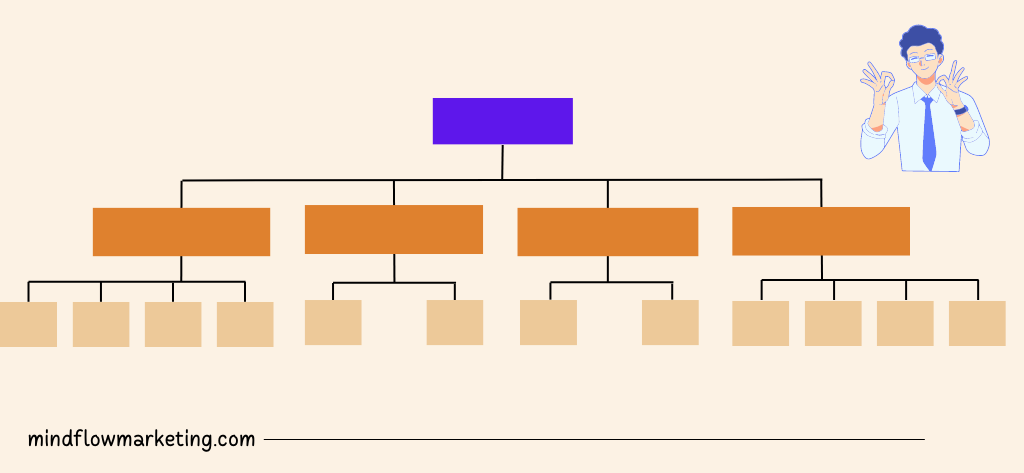

A well-structured website is essential for SEO success.

Flat Structures

In a flat structure, every page sits at most two clicks away from the homepage.

This helps search engine crawlers find and index your content quickly, and it makes your site easier for users to navigate.
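To check this on your own site, you can treat internal links as a graph and measure each page’s click depth with a breadth-first search from the homepage. The sketch below is a minimal Python example; the `site` dictionary is hypothetical sample data standing in for links collected by a crawler, and the two-click limit comes from the guideline above.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks from `home` to every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first via a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict[str, list[str]], home: str, max_clicks: int = 2) -> list[str]:
    """List reachable pages that sit more than `max_clicks` clicks from `home`."""
    return sorted(page for page, depth in click_depths(links, home).items() if depth > max_clicks)


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/archive/"],
    "/shop/": [],
}
print(too_deep(site, "/"))  # ['/blog/archive/']
```

Pages that never appear in `click_depths` are not reachable from the homepage at all (orphan pages), which is worth investigating too.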

Consistent URL Structure

URLs should be structured logically, reflecting the page’s hierarchy within the overall website structure.

For example, if you have a product category page with several subcategories, each subcategory page should have its own unique URL that builds on the main category page’s URL, e.g., www.examplewebsite.com/product-category/subcategory1.

This helps search engines understand how pages relate to one another, and it makes URLs easier for users to remember, bookmark or share later.
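If you generate URLs programmatically, one simple approach is to turn each level of the category path into a slug and join the slugs, so the URL mirrors the hierarchy. This is a minimal sketch; `slugify` and `category_url` are hypothetical helpers, and `examplewebsite.com` is a placeholder domain.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase, strip accents, and turn runs of other characters into hyphens."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def category_url(base: str, *levels: str) -> str:
    """Join slugified hierarchy levels onto the site's base URL."""
    return base.rstrip("/") + "/" + "/".join(slugify(level) for level in levels)


print(category_url("https://www.examplewebsite.com", "Product Category", "Subcategory1"))
# https://www.examplewebsite.com/product-category/subcategory1
```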

Breadcrumb Navigation

Breadcrumb navigation lets users easily trace their steps back up through the categories they’ve visited while browsing your site, giving them an easy route back to the homepage (or wherever else they need to go).

It also helps search engines better understand how different parts of your website fit together – especially useful when dealing with larger sites with lots of different sections and subsections!

Sidebars can make large websites much simpler to navigate as well: consider adding links that let visitors jump between related topics without having to scroll back up whenever they want something new!

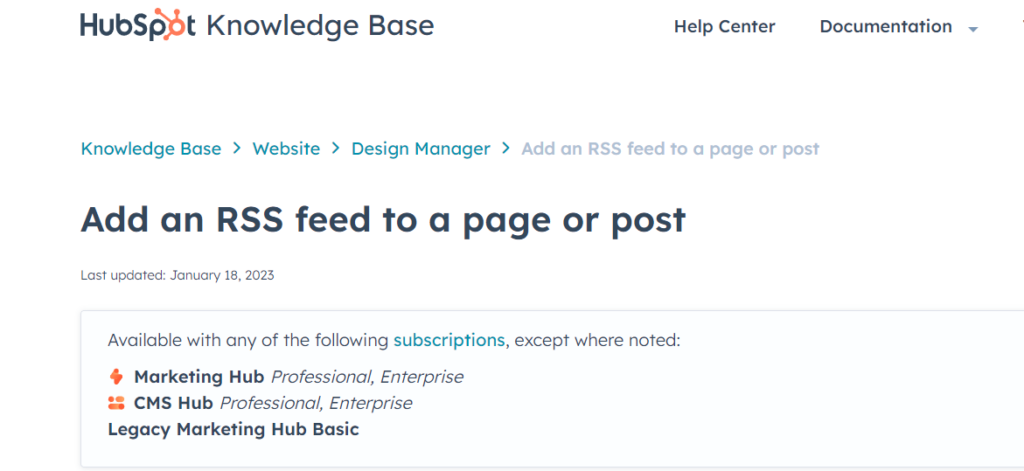

Here’s an example of hierarchy breadcrumbs from the HubSpot :
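Breadcrumbs can also be described to search engines with schema.org `BreadcrumbList` markup in JSON-LD. The sketch below builds that markup from an ordered trail of page names and URLs; the trail and `example.com` URLs are hypothetical, and it is worth checking the output with Google’s Rich Results Test before relying on it.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, homepage first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical trail for a blog post.
trail = [
    ("Home", "https://www.example.com/"),
    ("Blog", "https://www.example.com/blog/"),
    ("Technical SEO", "https://www.example.com/blog/technical-seo/"),
]
print('<script type="application/ld+json">')
print(breadcrumb_jsonld(trail))
print("</script>")
```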

Crawling, Rendering and Indexing

Crawling, rendering, and indexing are essential components of technical SEO.

Spot Indexing Issues

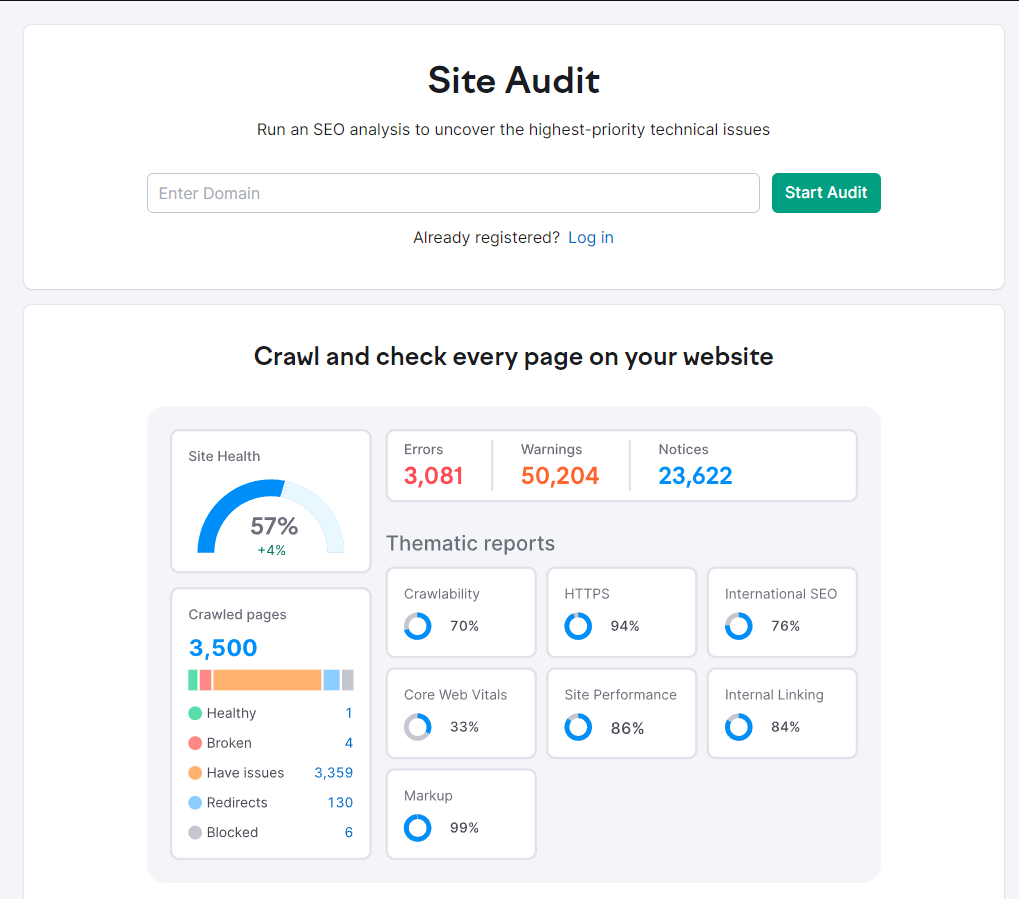

Spotting indexing issues is one way to ensure your website is being crawled, rendered and indexed correctly.

This can be done using tools such as Screaming Frog or Semrush Site Audit, which will show you any errors related to crawling or indexation that need addressing.

You should also check Google Search Console (GSC) regularly. Its Page indexing report (formerly called the Coverage report) shows how many of your pages Google has indexed over time, allowing you to spot crawling problems quickly and easily.
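One common cause of crawling problems is a robots.txt rule that blocks pages you want indexed. Python’s standard `urllib.robotparser` module can check a list of URLs against your rules; the rules and URLs below are hypothetical examples. This parser handles simple path-prefix rules and may not interpret every wildcard pattern the way Googlebot does, so treat it as a quick first check rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the robots.txt rules forbid `user_agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://www.example.com/cart/checkout",
    "https://www.example.com/search?q=shoes",
    "https://www.example.com/blog/technical-seo/",
]
print(blocked_urls(ROBOTS_TXT, urls))
```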

Internal Linking

Another important factor in ensuring your site gets crawled, rendered and indexed properly is internal linking, especially to deep pages. These links help search engines find all of your content more easily so it can be included in their index.
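To see which internal links a page actually contains, you can extract the `href` of every `<a>` tag and keep only links on the same host. This minimal sketch uses Python’s built-in `html.parser`; the page URL and HTML are made-up examples, and a real crawl would fetch each page and feed the results into a link graph.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.hrefs.append(href)


def internal_links(page_url: str, html: str) -> set[str]:
    """Return absolute same-host links found on the page, without #fragments."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in collector.hrefs:
        absolute = urldefrag(urljoin(page_url, href)).url  # resolve relative links
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.add(absolute)
    return links


# Hypothetical page content.
html = """
<a href="/guides/technical-seo/">Guide</a>
<a href="faq#crawling">FAQ</a>
<a href="https://other-site.example/">Partner</a>
<a href="mailto:hello@example.com">Email us</a>
"""
print(sorted(internal_links("https://www.example.com/blog/", html)))
```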

XML Sitemaps

XML sitemaps are also useful here: they list the URLs on your site, making it easier for crawlers to discover new content without having to follow links to every page. According to a Google representative, XML sitemaps are the “second most important source” for locating URLs.
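If your CMS doesn’t generate a sitemap for you, a basic one is easy to build. The sketch below writes a sitemap in the sitemaps.org format with Python’s `xml.etree.ElementTree`; the URLs and dates are placeholder data, and only the required `<loc>` tag plus the optional `<lastmod>` tag are included.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """Return sitemap XML for (absolute URL, W3C date such as 2024-01-15) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, < and >
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/technical-seo/", "2024-02-01"),
]))
```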

GSC URL Inspection

The URL Inspection tool lets you test individual URLs directly within GSC, so you can see exactly what information Google has about each URL before deciding whether further action is needed.

Thin and Duplicate Content

Thin and duplicate content can be a real technical SEO issue.

Use an SEO Audit Tool

An SEO audit tool is the best way to determine if you have any of these issues on your website.

It will crawl through your entire site and look for any instances of thin or duplicate content that may be present. It also provides detailed reports so you can easily identify the problems and act accordingly.

Dedicated site audit tools are another great option for finding thin or duplicate content on your website. They crawl your site, looking for pages with low word counts or with titles and descriptions similar to those of other pages on the same domain.

They then provide recommendations on how to fix those issues quickly and efficiently.

Screaming Frog is another useful tool for identifying thin or duplicate website content.

This program crawls every page of a website and reports word counts, title tags, meta descriptions and more, allowing users to easily spot potential areas of concern before they become bigger problems.
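The core checks these tools run can be approximated in a few lines once you have each page’s title, meta description and body text. This is a rough sketch: the 300-word threshold is an assumed cutoff rather than an official rule, and the `pages` data is hypothetical.

```python
from collections import defaultdict

MIN_WORDS = 300  # assumed cutoff for "thin"; tune it for your site


def thin_pages(pages: dict[str, dict[str, str]], min_words: int = MIN_WORDS) -> list[str]:
    """URLs whose body text has fewer than `min_words` words."""
    return sorted(url for url, page in pages.items() if len(page["text"].split()) < min_words)


def duplicate_groups(pages: dict[str, dict[str, str]], field: str) -> list[list[str]]:
    """Groups of URLs sharing the same value for `field` (ignoring case and outer spaces)."""
    groups = defaultdict(list)
    for url, page in pages.items():
        groups[page[field].strip().lower()].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl data.
pages = {
    "/red-shoes/": {"title": "Red Shoes", "description": "Buy red shoes", "text": "word " * 500},
    "/red-shoes-sale/": {"title": "red shoes ", "description": "Red shoes on sale", "text": "Coming soon"},
    "/blue-shoes/": {"title": "Blue Shoes", "description": "Buy red shoes", "text": "word " * 400},
}
print(thin_pages(pages))                       # ['/red-shoes-sale/']
print(duplicate_groups(pages, "title"))        # [['/red-shoes-sale/', '/red-shoes/']]
print(duplicate_groups(pages, "description"))  # [['/blue-shoes/', '/red-shoes/']]
```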

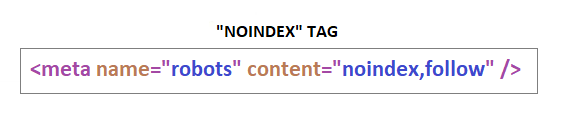

Noindexing Pages

Most websites will have pages with some duplicate content. That’s fine.

The problem arises when those duplicate pages get indexed. The solution? Add the “noindex” tag to those pages.

The noindex tag informs Google and other search engines that the page should not be indexed.
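The tag itself is `<meta name="robots" content="noindex">` in the page’s `<head>`; the same directive can also be sent as an `X-Robots-Tag` HTTP header. The sketch below checks both places for a given page. It assumes you have already fetched the HTML and response headers, and the sample inputs are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            self.directives.append((attributes.get("content") or "").lower())


def is_noindexed(html: str, headers: Optional[dict[str, str]] = None) -> bool:
    """True if the page's meta robots tags or X-Robots-Tag header contain noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = list(parser.directives)
    for key, value in (headers or {}).items():
        if key.lower() == "x-robots-tag":  # header names are case-insensitive
            directives.append(value.lower())
    return any("noindex" in directive for directive in directives)


print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(is_noindexed("<p>Print version</p>", {"X-Robots-Tag": "noindex"}))            # True
print(is_noindexed('<meta name="robots" content="index, follow">'))               # False
```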

Use Canonical URLs

Canonical URLs help resolve duplicate content, whether the duplicates sit on one domain or across several domains owned by the same business.

A canonical tag tells search engines which URL is the preferred version of a page. Ranking signals such as backlinks are then consolidated on that one URL instead of being split across several versions, and search engines no longer have to guess which version to index and rank in SERPs.

Ambiguity over which version is the “original” and which is the “duplicate” can lead to lower rankings overall. It is therefore highly recommended to use canonical URLs wherever duplicates exist, including when the same content is served at different URLs for desktop computers, tablets and mobile phones.
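Before adding a `<link rel="canonical">` tag, decide how duplicate URL variants map to one preferred version. The sketch below encodes one possible policy, which is an assumption you should adapt to your site: force HTTPS, lowercase the host, add a trailing slash to extensionless paths, drop `utm_` tracking parameters and strip fragments.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PREFIXES = ("utm_",)  # assumed: query parameters that never change page content


def canonical_url(url: str) -> str:
    """Map a URL variant to one preferred form under the example policy."""
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"  # treat extensionless paths as directories
    query = urlencode(
        [(k, v) for k, v in parse_qsl(parts.query) if not k.startswith(TRACKING_PREFIXES)]
    )
    return urlunsplit(("https", parts.netloc.lower(), path, query, ""))


def canonical_tag(url: str) -> str:
    """Render the <link> tag to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


print(canonical_tag("http://WWW.Example.com/shoes?utm_source=newsletter&color=red#reviews"))
# <link rel="canonical" href="https://www.example.com/shoes/?color=red">
```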

Improving Page Speed

How long will a visitor wait for your website to load? Six seconds is a generous estimate. According to one widely cited study, as page load time goes from one second to five seconds, the probability of a visitor bouncing increases by 90%. You don’t have a second to waste, so making your website load faster should be a top priority.

Reduce Page Size

Reducing page size is the first step: compressing images, minifying HTML, CSS and JavaScript files, and removing any unnecessary code or assets.
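Text assets such as HTML, CSS and JavaScript are usually served compressed (for example with gzip), which can shrink them considerably. This sketch uses Python’s built-in `gzip` module to estimate the savings for a few files; the asset contents are made-up and deliberately repetitive, so your real files will compress differently depending on their content and your server settings.

```python
import gzip


def compression_report(assets: dict[str, bytes]) -> dict[str, tuple[int, int]]:
    """Map each asset name to (original size, gzip-compressed size) in bytes."""
    return {name: (len(body), len(gzip.compress(body))) for name, body in assets.items()}


# Hypothetical, highly repetitive assets.
assets = {
    "index.html": b"<div class='card'><p>Product</p></div>\n" * 200,
    "styles.css": b".card { padding: 16px; margin: 0 auto; }\n" * 100,
}
for name, (raw, compressed) in compression_report(assets).items():
    print(f"{name}: {raw} -> {compressed} bytes ({1 - compressed / raw:.0%} smaller)")
```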

Content Delivery Networks

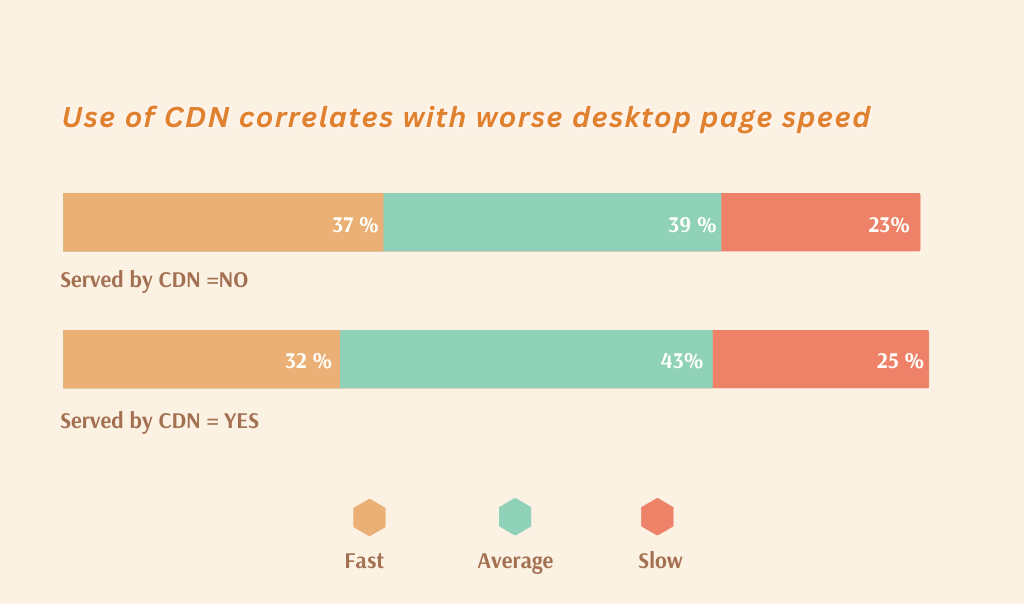

Content Delivery Networks (CDNs) can also help improve page speed by storing copies of your content on servers around the world, so it is delivered quickly to users regardless of their location.

Caching Static Resources

Caching static resources such as images, stylesheets and scripts lets returning visitors’ browsers reuse files they have already downloaded, which can reduce loading times significantly.
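Browser caching is controlled with the `Cache-Control` response header. The sketch below shows one hypothetical policy: long-lived caching for static files whose names change whenever their content changes (fingerprinted files such as `app.3f9a.js`), and revalidation for everything else, including HTML. The suffix list and one-year `max-age` are assumptions to adjust for your site.

```python
from pathlib import PurePosixPath

# Assumed policy: these file types are served with fingerprinted names (e.g. app.3f9a.js),
# so a changed file always gets a new URL and can safely be cached for a year.
LONG_LIVED_SUFFIXES = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Choose a Cache-Control header value for a URL path (query strings excluded)."""
    if PurePosixPath(path).suffix.lower() in LONG_LIVED_SUFFIXES:
        return "public, max-age=31536000, immutable"
    return "no-cache"  # HTML and everything else: revalidate before reuse


for path in ("/static/app.3f9a.js", "/images/Hero.JPG", "/blog/technical-seo/"):
    print(path, "->", cache_control_for(path))
```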

Lazy Loading

Lazy loading is another great way to reduce initial page weight. Instead of loading all content at once when a user visits your site, only the content needed for the current view is loaded initially; the rest, such as images further down the page, loads as the user scrolls.
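Modern browsers support native lazy loading for images through the `loading="lazy"` attribute. The sketch below is a hypothetical helper that marks images lazy by default but loads above-the-fold images eagerly, since lazy-loading the first image a visitor sees can slow down the initial view. It also sets `width` and `height` so the browser can reserve space before each image arrives.

```python
from html import escape


def img_tag(src: str, alt: str, width: int, height: int, above_fold: bool = False) -> str:
    """Render an <img> that lazy-loads unless it appears in the initial view."""
    loading = "eager" if above_fold else "lazy"
    return (
        f'<img src="{escape(src)}" alt="{escape(alt)}" '
        f'width="{width}" height="{height}" loading="{loading}">'
    )


# Hypothetical images: the hero is visible immediately, the gallery is further down.
print(img_tag("/images/hero.webp", "Team at work", 1200, 600, above_fold=True))
print(img_tag("/images/gallery-1.webp", "Office tour", 800, 533))
```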

Minifying CSS

Minifying CSS can also help with page speed. This involves removing whitespace, comments and other unnecessary characters from stylesheets so that they’re smaller in file size without affecting functionality or appearance.
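To see what minification does, here is a deliberately naive regex-based CSS minifier. It is an illustration only: it assumes your CSS has no quoted strings or selectors (such as `a :hover`) where the removed spaces or semicolons matter, so use an established minifier in production.

```python
import re


def minify_css(css: str) -> str:
    """Naively shrink CSS: drop comments and whitespace the browser doesn't need."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,>])\s*", r"\1", css)         # trim spaces around punctuation
    css = css.replace(";}", "}")                          # final semicolon in a block is optional
    return css.strip()


source = """
/* Card component */
.card , .panel {
    padding : 16px;
    margin: 0 auto;
}
"""
print(minify_css(source))  # .card,.panel{padding:16px;margin:0 auto}
```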

To assess how much these techniques improve page speed, use WebPageTest (webpagetest.org) to measure performance with and without your CDN enabled.

This will show how much faster your website could become with a service such as Cloudflare or Amazon CloudFront.

Also eliminate third-party scripts where feasible, since they often add extra bulk (tracking pixels, for example) that slows down loading. Run Google PageSpeed Insights tests before and after disabling a third-party script to compare the results.
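To find out which third-party scripts a page loads, you can list the hosts of its external `<script src>` tags. This sketch assumes that any host other than the page’s own counts as third-party (so a subdomain such as a CDN host would be flagged too); the HTML and hostnames are made-up examples.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ScriptCollector(HTMLParser):
    """Collect the src of every external <script> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: list[str] = []

    def handle_starttag(self, tag, attrs):
        src = dict(attrs).get("src")
        if tag == "script" and src:
            self.sources.append(src)


def third_party_script_hosts(page_url: str, html: str) -> list[str]:
    """Hosts serving scripts to the page, other than the page's own host."""
    collector = ScriptCollector()
    collector.feed(html)
    own_host = urlparse(page_url).netloc
    hosts = {urlparse(urljoin(page_url, src)).netloc for src in collector.sources}
    return sorted(host for host in hosts if host and host != own_host)


# Hypothetical page markup.
html = """
<script src="/js/app.js"></script>
<script src="https://analytics.example.net/pixel.js" async></script>
<script src="//widgets.example.org/chat.js"></script>
<script>console.log("inline scripts have no src");</script>
"""
print(third_party_script_hosts("https://www.example.com/", html))
# ['analytics.example.net', 'widgets.example.org']
```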

Implementing hreflang for International Websites

When it comes to international websites, implementing hreflang is an essential part of technical SEO. This technique helps search engines understand the content on a website and serve the most relevant version to users in different countries or regions.

The main purpose of hreflang tags is to help search engine crawlers accurately identify which language each page is written in and which geographic audience it targets.

By doing this, you can ensure that your site’s content appears in the right places and gets served to the right people.

To implement hreflang tags correctly, you must create a tag for each language-region combination you want your website to be available in.

For example, if you have two versions of your site, one for English speakers in Canada and another for French speakers in France, then you would need two separate tags: one with “en-ca” (English, Canada) and another with “fr-fr” (French, France). Each tag must also include the URL of the page version it points to.
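The tags are `<link rel="alternate" hreflang="en-ca" href="https://www.example.com/en-ca/">` style elements in each page’s `<head>`. Google expects the annotations to be reciprocal: every version lists all the versions, including itself. The sketch below generates that shared block; the `example.com` URLs are hypothetical placeholders.

```python
from html import escape


def hreflang_tags(alternates: dict[str, str]) -> str:
    """Return the <link> block to place in the <head> of EVERY listed page version.

    `alternates` maps language-region codes such as "en-ca" to that version's URL.
    Because every version gets the same block, each page references itself and
    all the others, which keeps the annotations reciprocal.
    """
    return "\n".join(
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    )


# Hypothetical URLs for the two versions described above.
print(hreflang_tags({
    "en-ca": "https://www.example.com/en-ca/",
    "fr-fr": "https://www.example.com/fr-fr/",
}))
```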

It’s important to note that while hreflang tags are incredibly useful when optimizing international sites, they should not be used as a substitute for creating localized content tailored to different audiences worldwide.

Try adding unique elements such as local images or cultural references into your copy so that visitors from other countries will feel more at home when visiting your site!

Check Your Site for Dead Links

Dead links can be a major issue for any website, as they not only detract from the user experience but also have a negative impact on SEO. To ensure that your site is free of dead links, it’s important to check them regularly.

Fortunately, several tools can help you do this quickly and easily.

Xenu Link Sleuth is one of the most popular tools for checking dead links. This tool scans websites for broken hyperlinks and displays them in an easy-to-read report format so you can identify which ones need to be fixed or removed.

It’s fast and efficient, making it ideal for larger sites with hundreds or thousands of pages.

Another great option is Google Search Console (GSC). GSC lets you view the 404 errors Google has found on your site, along with details such as the affected URL and when Googlebot last crawled it.

This makes it easier to pinpoint exactly which link needs attention so you can fix it immediately without wasting time searching your entire website manually.

Screaming Frog SEO Spider is another useful tool that helps identify broken links on websites large and small alike.

It crawls through every page of your site, looking for issues like redirect chains or missing image files that could lead to dead links being created over time if left unchecked.

Additionally, its “custom filter” feature allows users to create custom rules based on specific criteria, such as response codes or file types, to narrow their search results even further when needed.

These are just some of the many tools available today that make finding and fixing dead links much simpler than ever before – saving time and money!
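If you prefer a scriptable check, the sketch below sends a HEAD request to each URL with Python’s standard `urllib` and reports anything that returns a 4xx or 5xx status or can’t be reached. It is a minimal example under a few assumptions: some servers reject HEAD requests (you may need to retry with GET), and the `status_of` parameter can be swapped for a stub so you can test the logic without network access, as in the demo below.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """HTTP status of a HEAD request to `url` (redirects are followed); 0 if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses arrive as exceptions
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return 0


def dead_links(urls: Iterable[str], status_of: Callable[[str], int] = fetch_status) -> list[tuple[str, int]]:
    """Return (url, status) for every link that errors or can't be reached."""
    broken = []
    for url in urls:
        status = status_of(url)
        if status == 0 or status >= 400:
            broken.append((url, status))
    return broken


# Offline demo with a stubbed status lookup (hypothetical URLs and statuses).
fake_statuses = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page/": 404,
    "https://gone.example.net/": 0,
}
print(dead_links(fake_statuses, fake_statuses.get))
```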

Set Up Structured Data

Structured data is a powerful tool for SEO professionals. It helps search engines better understand the content of your website and can even give some pages Rich Snippets in SERPs (Search Engine Results Pages).

Structured data is code you add to your HTML to give search engines more information about the page’s content. This extra context helps search engine crawlers make sense of what’s on the page and how it relates to other pages on your site.

For example, if you have an e-commerce store selling books, structured data can help Google understand that a certain product page contains book-related information such as the title, author name and ISBN.

Adding this extra layer of detail to each product page with structured data markup gives search engines more “clues” about what kind of content the page contains and when it should be displayed for related searches.
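For the bookstore example, the markup is typically added as a JSON-LD `<script type="application/ld+json">` block. This sketch generates schema.org `Book` markup with Python’s `json` module; the title, author and ISBN are made-up values, and it is worth confirming which properties Google supports for your page type before deploying.

```python
import json


def book_jsonld(title: str, author: str, isbn: str) -> str:
    """Build schema.org Book markup for a product page."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "Book",
            "name": title,
            "author": {"@type": "Person", "name": author},
            "isbn": isbn,
        },
        indent=2,
    )


# Made-up book details.
print('<script type="application/ld+json">')
print(book_jsonld("The Example Novel", "Jane Doe", "9780000000000"))
print("</script>")
```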

Structured data can also support your keyword targeting by providing additional context around the topics a page covers.

For instance, if you publish a page on the “best fiction books 2020”, marking up the list of books with structured data tells Google that all of these products belong together under one umbrella topic, which can make them easier to find in SERPs than they would be without that context.

Validate Your XML Sitemaps

Validating your XML sitemaps is an important part of technical SEO. It helps ensure that all the pages on your website are properly indexed by search engines and can be easily found by users.

A well-structured XML sitemap provides a roadmap for search engine crawlers to find and index all the content on your website, making it easier for users to discover relevant information quickly.

Use XML Sitemap Validator

An XML sitemap validator such as Map Broker’s XML Sitemap Validator can help ensure your sitemap is up to date and accurate.

This tool will scan through each entry in the sitemap, checking for any errors or inconsistencies in structure or formatting.

If any issues are detected, they will be highlighted so you can address them before submitting the sitemap to search engines like Google or Bing.

When using this tool, it’s important to check your sitemap’s HTML and RSS versions, if available.

The HTML version should include all pages from your website, while the RSS version should only contain recently updated content, such as blog posts or news articles.

Check Warnings About Duplicate URLs

Pay attention to any warnings about duplicate URLs, which could indicate potential problems with canonicalization or redirects on those pages which need further investigation.
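If you want a quick local check before reaching for a validator, the sketch below parses a sitemap with Python’s `xml.etree.ElementTree` and flags malformed XML, relative URLs, duplicate URLs and files over the sitemaps.org limit of 50,000 URLs. It only handles `<urlset>` files (not sitemap index files), and the sample sitemap is hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
URLSET_TAG = "{http://www.sitemaps.org/schemas/sitemap/0.9}urlset"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def validate_sitemap(xml_text: str) -> list[str]:
    """Return a list of problems found in a <urlset> sitemap (empty if none)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as err:
        return [f"not well-formed XML: {err}"]
    problems = []
    if root.tag != URLSET_TAG:
        problems.append(f"unexpected root element {root.tag}")
    locs = [(loc.text or "").strip() for loc in root.findall("sm:url/sm:loc", NS)]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} limit")
    seen = set()
    for loc in locs:
        parsed = urlparse(loc)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
        if loc in seen:
            problems.append(f"duplicate URL: {loc}")
        seen.add(loc)
    return problems


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>/blog/</loc></url>
  <url><loc>https://www.example.com/</loc></url>
</urlset>"""
for problem in validate_sitemap(sample):
    print(problem)
```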

Check Broken Links

When validating an XML sitemap, it’s also essential to check whether either version of the file contains broken links; these could lead searchers astray if not addressed promptly!

Doing this manually would take hours, but luckily tools like Map Broker allow you to validate both versions simultaneously, so you don’t have to go through every link individually.

By regularly validating your XML sitemaps with a reliable tool like Map Broker’s, you’ll be able to keep track of changes made over time and ensure that all relevant content is correctly indexed by search engines, giving users a better experience when visiting your website!


Conclusion

By applying the strategies outlined in this comprehensive guide to technical SEO, you can increase your website’s visibility and position on major search engines. By optimizing your site architecture, making your pages easy to crawl and index, resolving duplicate content, speeding up your pages, fixing dead links, adding structured data and monitoring progress with regular audits, you will be able to maximize organic traffic from targeted audiences while staying ahead of the competition.

Take your website to the next level and maximize its visibility with our ultimate technical search engine optimization guide. Our comprehensive solutions will help you improve site architecture, web usability, user experience (UX), and more!