As an SEO expert, you understand the significance of avoiding duplicate content on your website to maintain good search engine rankings.

This blog post will explore what constitutes duplicate content and why it can negatively impact your search engine rankings.

This post will also cover how to identify and prevent duplicate content issues, with strategies for creating unique and valuable content that helps you stay ahead of the competition.

Additionally, we’ll examine the penalties associated with having too much similar or scraped content across multiple pages or URLs.

By the end, you will have a better grasp of why unique content is essential for SEO and how to keep your site ahead in today’s competitive digital space.

Multiple Site Issues

Multiple site issues can be a tricky problem to tackle.

Web admins and SEO professionals must understand the implications of having multiple URLs associated with their websites, as it can impact user experience (UX) and search engine optimization (SEO).

When it comes to SEO, having multiple URLs for the same page can lead to duplicate content issues (discussed in more detail below).

This is because search engines may index every version of the page and then have to guess which one to rank. In some cases, many near-identical copies can even look like an attempt to manipulate rankings.

Solution: To avoid this issue, use canonical tags or 301 redirects so search engines know which version should be indexed.

Another potential issue related to multiple URLs is link dilution.

When several versions of a page are floating around online, the links that other websites point at it get split among those versions. No single URL collects the full set of backlinks, which reduces its overall authority in the eyes of Google and other major search engines.

Solution: The best way to combat this is by consolidating all variations into one URL and using 301 redirects for links pointing at outdated pages or locations.
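The consolidation step can be sketched in Python. This is a minimal sketch, assuming you keep a simple map of outdated paths to their replacements (the paths and helper names below are hypothetical, not part of any CMS). It collapses redirect chains so that each old URL 301s straight to the final page instead of hopping through intermediate URLs, and it stops on redirect loops.

```python
from __future__ import annotations

# Hypothetical redirect map: outdated path -> the path it currently redirects to.
REDIRECTS = {
    "/old-shoes": "/shoes-2019",
    "/shoes-2019": "/shoes",
    "/shoes.html": "/shoes",
}


def final_destination(path: str, redirects: dict[str, str], max_hops: int = 10) -> str:
    """Follow a redirect chain to the page that should ultimately receive the traffic."""
    seen = {path}
    current = path
    for _ in range(max_hops):
        nxt = redirects.get(current)
        if nxt is None:
            return current  # no further redirect: this is the consolidated URL
        if nxt in seen:
            raise ValueError(f"redirect loop involving {nxt}")
        seen.add(nxt)
        current = nxt
    raise ValueError(f"more than {max_hops} hops starting from {path}")


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every outdated URL straight at its final destination (one 301, no chains)."""
    return {source: final_destination(source, redirects) for source in redirects}


print(flatten(REDIRECTS))
# {'/old-shoes': '/shoes', '/shoes-2019': '/shoes', '/shoes.html': '/shoes'}
```

The flattened map would then feed whatever actually issues the redirects on your site, such as web server rules, a CMS plugin, or application code.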

Finally, from a UX design perspective, having too many URLs for the same content can confuse users, who may click through several different links before finding what they need, if they ever find it!

A good rule of thumb here is:

Less is more: keep things simple by avoiding unnecessary navigation elements or pages added just for show, and keep everything streamlined and focused on delivering value quickly and efficiently.

Now let’s look at what duplicate content is in the first place.

What is Duplicate Content?

Duplicate content is any content that appears on the web in more than one place. This can include exact copies of web pages, blog posts, or even text snippets. It can also include different versions of the same page, such as separate mobile and desktop versions. Duplicate content is a challenge for SEO because search engines may be unable to tell which page should rank for a given keyword.

When duplicate content exists at different URLs, search engines may be unable to determine which URL to index and rank. As a result, the pages compete with each other for ranking positions, diluting the SEO efforts of the site’s owners or marketers. This can reduce visibility in organic search results, since search engines usually show only one version of the content at a time, regardless of how many copies exist online.

Beyond competing for rankings, duplicate content can confuse users who are looking for specific information on your website or blog but keep landing on near-identical pages with the same information. This hurts user experience (UX): frustrated visitors who cannot quickly find what they need may leave without taking action or making a purchase.

Duplicate content is a significant SEO issue with real consequences for your website, so it’s essential to understand what it is and how to avoid it. Let’s start with why it matters.

Why Does Duplicate Content Matter?

Duplicate content can be a serious website issue, affecting search engine rankings, traffic, and user experience. When search engines find multiple versions of the same content, they typically filter out all but one; if the duplication looks deliberately manipulative or spammy, the site may also be demoted. Duplicate content can also dilute a website’s authority, making it less likely to rank highly in search results. For these reasons, website owners must understand why duplicate content matters and take steps to avoid it.

#1. Duplicate Content Affects UX

Duplicate content can hurt user experience (UX). It confuses both visitors and search engines, making it harder to reach the correct page quickly. This can lead to higher bounce rates and lower engagement with your site overall.

Furthermore, if multiple versions of the same content are indexed in Google, users searching for something specific may land on an outdated or irrelevant version and leave without taking any action. To avoid this, canonicalize duplicate content correctly so that only one version appears on search engine results pages (SERPs).

#2. Search Engines Struggle with Duplicate Content

Search engines like Google struggle with duplicate content because they don’t know which URL to show in the SERPs for a particular query, which can lead to a poor user experience.

You can avoid this issue with rel=canonical tags, which tell search engines which version of a page to index. Users arriving from organic search then land on the relevant, up-to-date version.

You can also use 301 redirects where needed. A 301 sends everyone to the single correct page, whether they typed a variant URL into the browser bar or clicked a link on another website, so duplicate versions elsewhere in your domain stop competing with it.

How Do Duplicate Content Issues Happen?

Duplicate content issues can occur on websites in several ways, often unintentionally. Content duplication can happen due to technical problems, such as the use of printer-friendly versions of pages, pagination, or session IDs.

It can also occur when content is intentionally duplicated across multiple pages or websites. Websites may sometimes use scraped or copied content from other sources, which can result in serious legal consequences. Understanding how duplicate content issues happen is crucial in preventing them from affecting website performance, search engine rankings, and user experience.

Multiple URL Parameters

URL parameters such as click-tracking and analytics codes can create duplicate content issues, both through the parameters themselves and through the order in which they appear in the URL.

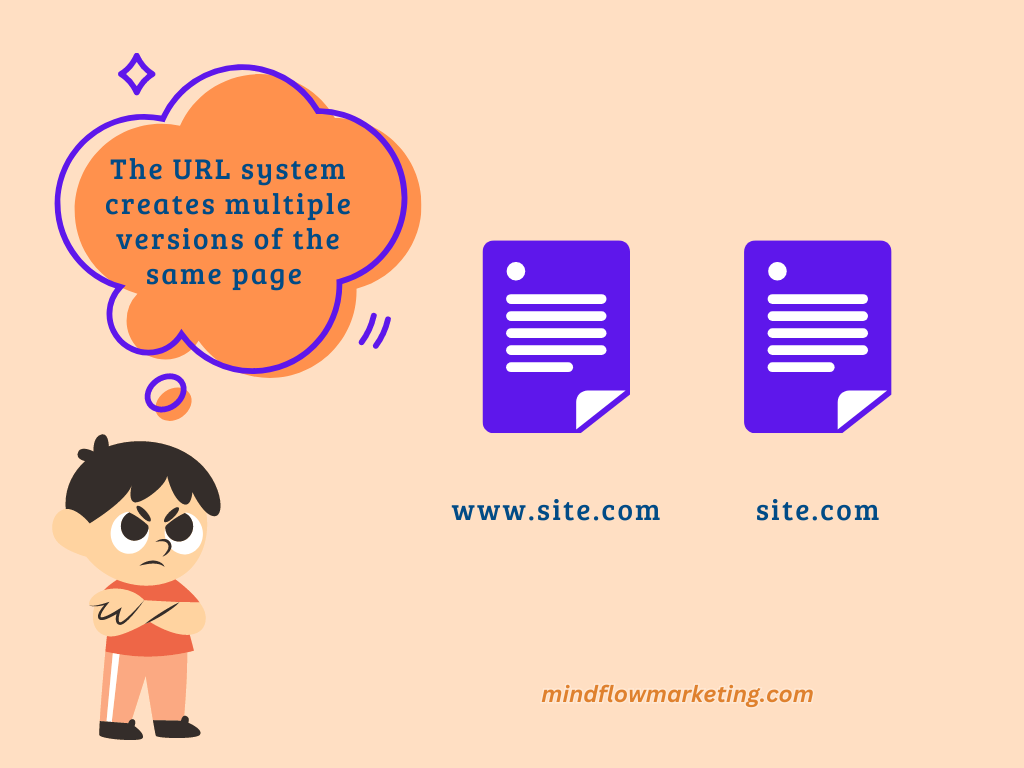

For example, www.site.com/shoes?color=red&size=9 and www.site.com/shoes?size=9&color=red usually show the same page, but search engines treat them as separate URLs. The same problem occurs when a website serves identical content at both “www.site.com” and “site.com”: search engines may index both versions, causing a duplicate content issue.
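To show how parameter order and host variants can collapse into one address, here is a minimal Python sketch of URL normalization. It assumes the site’s preferred form is `https://` on the bare domain and that the listed tracking parameters (`utm_*`, `gclid`, `fbclid`) never change page content; both are assumptions to adjust for your own site.

```python
from __future__ import annotations

from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only track clicks and never change what the page shows.
TRACKING_PREFIXES = ("utm_",)
TRACKING_PARAMS = {"gclid", "fbclid"}


def normalize_url(url: str) -> str:
    """Map equivalent URL variants onto one preferred form."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is lowercased and excludes any port
    if host.startswith("www."):
        host = host[len("www."):]  # assumption: the bare domain is the preferred host
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS and not key.startswith(TRACKING_PREFIXES)
    ]
    params.sort()  # after sorting, parameter order no longer creates new URLs
    # Assumption: the site is served over HTTPS; the #fragment is dropped.
    return urlunsplit(("https", host, parts.path or "/", urlencode(params), ""))


print(normalize_url("http://www.Site.com/shoes?size=9&color=red&utm_source=news"))
print(normalize_url("https://site.com/shoes?color=red&size=9"))
# both print https://site.com/shoes?color=red&size=9
```

A normalizer like this is useful for auditing crawl data or choosing the URL to put in a canonical tag; it does not by itself stop search engines from finding the variants.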

Session IDs

Another common source of duplicate content is session IDs: unique identifiers that some websites assign to each visitor and store in the URL. Because every visitor gets a different ID, the same page becomes reachable at many different URLs, creating duplication problems for search engine crawlers trying to index your site correctly.
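Session IDs can be stripped before URLs are linked, logged, or compared. The sketch below assumes the session parameter names listed in `SESSION_PARAMS`, a hypothetical list (`PHPSESSID` and the `;jsessionid=` path suffix used by Java servlet containers are common real-world examples), and removes them while keeping every other parameter.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: the query-parameter names your platform uses for session IDs.
SESSION_PARAMS = {"phpsessid", "sessionid", "sid"}
# Java servlet containers may append ";jsessionid=..." to the path itself.
JSESSIONID = re.compile(r";jsessionid=[^/?#]*", re.IGNORECASE)


def strip_session_id(url: str) -> str:
    """Return the URL with session identifiers removed from the path and query."""
    parts = urlsplit(url)  # urlsplit keeps ";jsessionid=..." inside parts.path
    path = JSESSIONID.sub("", parts.path)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, path, urlencode(params), parts.fragment))


print(strip_session_id("https://site.com/cart;jsessionid=ABC123?item=7"))
print(strip_session_id("https://site.com/page?PHPSESSID=xyz&page=2"))
```

The more durable fix is to keep session state in cookies so the IDs never reach the URL in the first place.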

Printer-Friendly Versions

Printer-friendly versions of web pages can also cause duplicate content issues when search engines index both the printer-friendly copy and the regular page instead of just one preferred version.

To avoid this problem, either don’t link to printer-friendly versions from every page, or add noindex tags to them so that only the regular version is crawled and indexed by search engines like Google or Bing.
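One way to apply the noindex advice without editing every template is to send an `X-Robots-Tag` HTTP header, which works like a robots meta tag. This sketch assumes a hypothetical convention where print views live under `/print/` or carry `?print=1`; the function name is also hypothetical.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlsplit


def robots_headers(url: str) -> dict[str, str]:
    """Extra HTTP headers that keep printer-friendly copies out of the index."""
    parts = urlsplit(url)
    # Hypothetical conventions: print views live under /print/ or use ?print=1.
    is_print = parts.path.startswith("/print/") or parse_qs(parts.query).get("print") == ["1"]
    if is_print:
        # "noindex, follow": leave the page out of results but let crawlers follow its links.
        return {"X-Robots-Tag": "noindex, follow"}
    return {}


print(robots_headers("https://site.com/guide?print=1"))
print(robots_headers("https://site.com/guide"))
```

Alternatively, the printer-friendly page can carry a canonical tag pointing to the regular version.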

Product Information Pages

Ecommerce sites are particularly vulnerable to duplicated product information, because many retailers use the manufacturer’s description for their products. The result is identical copy across many sites, which causes significant SEO problems down the line. Use noindex tags or canonicalization on these pages so that search engine crawlers and algorithms treat only one version as authoritative.
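To check how close your product copy is to the manufacturer’s text, one common technique is to compare word “shingles” (overlapping runs of words) using Jaccard similarity. This is a minimal sketch; the shingle size of 3 and any threshold you pick for flagging pages are assumptions, not figures used by any search engine.

```python
from __future__ import annotations

import re


def words(text: str) -> list[str]:
    """Lowercase words with punctuation removed."""
    return re.findall(r"[a-z0-9]+", text.lower())


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """All overlapping k-word sequences in the text."""
    w = words(text)
    return {tuple(w[i:i + k]) for i in range(len(w) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Shared shingles divided by all distinct shingles: 1.0 means identical wording."""
    sa, sb = shingles(a, k), shingles(b, k)
    union = sa | sb
    if not union:
        # Both texts are shorter than k words; fall back to comparing them directly.
        return 1.0 if words(a) == words(b) else 0.0
    return len(sa & sb) / len(union)


maker = "Lightweight running shoe with breathable mesh upper and cushioned sole."
ours = "Our testers loved this shoe on long runs; the mesh keeps feet cool."
print(jaccard(maker, maker), jaccard(maker, ours))
```

Scores near 1.0 suggest the description was copied almost verbatim and is a good candidate for rewriting.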

To compare texts between web pages manually, you can paste them side by side into Microsoft Word documents to spot similarities. Google’s Advanced Search feature can also help identify potential plagiarism by searching for an exact phrase from your page; watch for near-duplicates that differ only by minor changes in wording.
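Advanced Search’s exact-phrase field works like wrapping text in quotes, and the `-site:` operator excludes your own domain from the results. A small helper can build that query for a sentence from your page; `exact_phrase_search_url` and the example domain are hypothetical.

```python
from urllib.parse import urlencode


def exact_phrase_search_url(sentence: str, own_domain: str) -> str:
    """Google search URL for an exact sentence, excluding pages on your own domain."""
    query = f'"{sentence}" -site:{own_domain}'
    return "https://www.google.com/search?" + urlencode({"q": query})


print(exact_phrase_search_url("our testers loved this shoe", "example.com"))
```

Opening the resulting URL shows other sites that contain the same sentence word for word.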

How to Identify Duplicate Content

Duplicate content is a significant issue for search engine optimization (SEO) and website usability. It can lead to poor user experience (UX), lower rankings, and decreased traffic.

Fortunately, there are several tools available that can help identify duplicate content on your website or blog.

The first step in identifying duplicate content is to understand what it is. Duplicate content occurs when two or more web pages have the same or similar text, titles, meta descriptions, headings, etc., but with different URLs.
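Because duplication often shows up first in titles and meta descriptions, a quick check is to group pages by those fields. This sketch assumes you have already collected each URL’s title and description, for example from a crawl export, into a dictionary; the sample data and field names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical crawl data: URL -> on-page fields.
PAGES = {
    "/a": {"title": "Red Shoes", "description": "Buy red shoes"},
    "/b": {"title": "red  shoes", "description": "Shop our range"},
    "/c": {"title": "Blue Shoes", "description": "Buy red shoes"},
}


def find_duplicate_fields(pages: dict[str, dict[str, str]], field: str) -> dict[str, list[str]]:
    """Group URLs that share the same value (ignoring case and spacing) for one field."""
    groups = defaultdict(list)
    for url, fields in pages.items():
        value = " ".join(fields.get(field, "").split()).lower()
        if value:  # skip pages where the field is missing or empty
            groups[value].append(url)
    return {value: urls for value, urls in groups.items() if len(urls) > 1}


print(find_duplicate_fields(PAGES, "title"))
print(find_duplicate_fields(PAGES, "description"))
```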

Duplication can be intentional or unintentional. Either way, address it as soon as possible: Google generally filters duplicate pages out of its results rather than penalizing them, but duplication intended to manipulate rankings can lead to penalties.

One of the most popular tools for identifying duplicate content is Copyscape Premium, which lets you scan entire websites for plagiarism and text duplicated from other sources online.

Another great tool is Siteliner, which analyzes your site’s pages and highlights duplication across them so you can act quickly if needed.

Finally, Screaming Frog SEO Spider crawls every page of your website, flagging potential issues such as broken links and redirects and highlighting duplication between pages on the same domain. This makes it easy to spot problems before they affect how search engines like Google or Bing see your site!
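Exact duplicates are commonly detected by fingerprinting page content. The following is a minimal sketch of that idea, not a description of how Screaming Frog or any other tool works: it strips tags with a crude regex, normalizes whitespace and case, hashes the result with SHA-256, and groups URLs that share a hash. The sample pages are hypothetical.

```python
from __future__ import annotations

import hashlib
import re
from collections import defaultdict


def fingerprint(html: str) -> str:
    """Hash a page's text after removing tags and collapsing whitespace and case."""
    text = re.sub(r"<[^>]+>", " ", html)  # crude tag removal; fine for a sketch
    text = " ".join(text.split()).lower()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Lists of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[fingerprint(html)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl result: URL -> raw HTML.
SAMPLE = {
    "/a": "<p>Hello   world</p>",
    "/b": "<div>hello world</div>",
    "/c": "<p>Something else</p>",
}
print(exact_duplicates(SAMPLE))
```

Hashing only catches exact matches; near-duplicates need a similarity measure instead.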

For a more comprehensive solution than these individual tools offer, consider a service like Lumar (formerly DeepCrawl), which provides deep-level analysis, including checks for canonicalization errors (where multiple versions of the same page exist), along with detailed reports on how each page performs on SEO metrics such as keyword density and backlink quality and quantity.

In addition to these automated solutions, you can compare texts manually by copying and pasting them side by side into Microsoft Word documents, which lets you quickly see whether pages are similar without any additional software!
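If you would rather script the side-by-side comparison, Python’s standard `difflib` module can score two texts and list the spans that differ. This sketch compares at the word level; the function names and sample sentences are hypothetical.

```python
from __future__ import annotations

import difflib


def similarity(a: str, b: str) -> float:
    """Ratio from 0.0 (nothing in common) to 1.0 (identical word sequences)."""
    return difflib.SequenceMatcher(None, a.split(), b.split()).ratio()


def changed_words(a: str, b: str) -> list[tuple[str, str]]:
    """Pairs of (text in a, text in b) for every span of words that differs."""
    wa, wb = a.split(), b.split()
    matcher = difflib.SequenceMatcher(None, wa, wb)
    return [
        (" ".join(wa[i1:i2]), " ".join(wb[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


a = "the quick brown fox jumps"
b = "the quick red fox jumps"
print(similarity(a, b))     # 0.8: 4 of the 5 words line up in both texts
print(changed_words(a, b))  # [('brown', 'red')]
```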

How to Avoid Duplicate Content Issues?

Duplicate content can be a significant obstacle to successful SEO, reducing your website’s visibility and making it harder for users to find relevant information on your site. When no two pages have the same or similar content, search engines can better identify which page to index, and users can more easily find what they need. To prevent duplicate content issues, rephrase and optimize your website’s content for search engine indexing.

First, create unique and original content for each page of your website, including text, visuals, videos, and other media; don’t simply copy it from somewhere else. Where multiple versions of a page exist at slightly different URLs, use canonical tags to indicate which version search engines should index.

Second, if you must use duplicate content from an external source, such as another website or blog post, ensure it is properly attributed and linked back to its source. This will help ensure that users and search engines know where the original material came from and can easily access additional information.

Third, if you have multiple websites covering similar topics or targeting different audiences, consider consolidating them into one main site instead of maintaining several versions with identical or near-identical content across domains. Consolidation helps users quickly find what they need without being confused by too many options, and helps search engine crawlers identify the most relevant version based on their algorithms and user signals such as click-through rates (CTRs).

Google Search Console’s parameter handling tool was designed to mitigate duplication caused by UTM codes and session IDs. It let site owners define how these parameters were treated during indexing so that crawl budget was allocated more consistently, which could help rankings and visibility for their pages on SERPs.

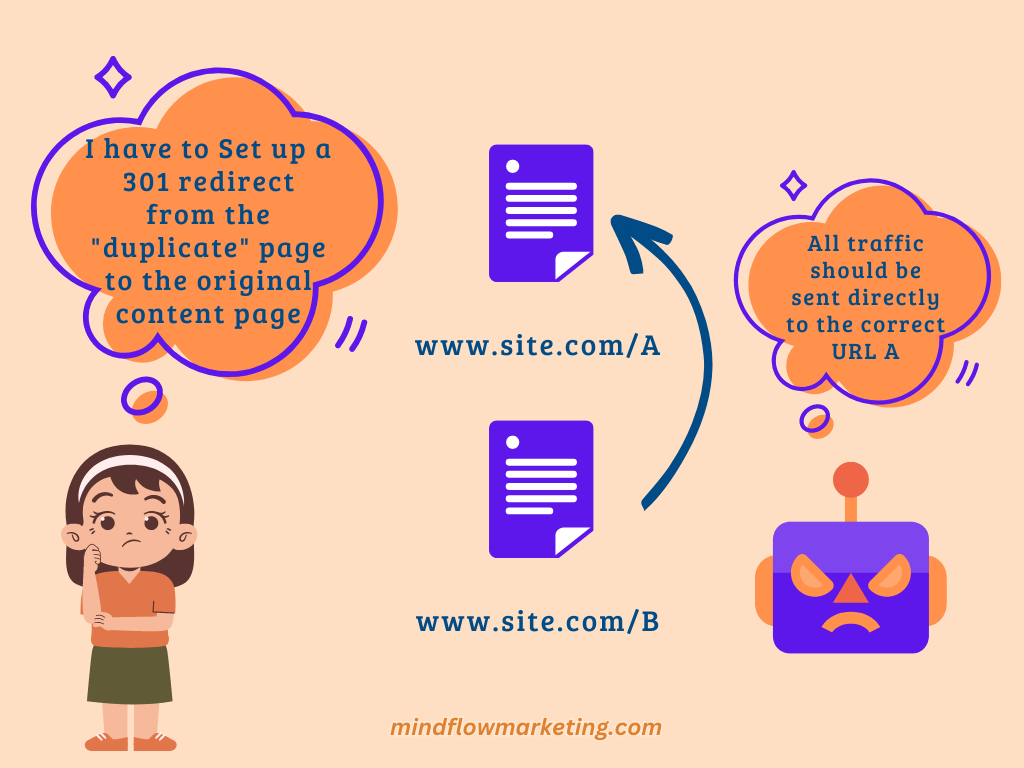

Using a 301 Redirect

One of the best ways to combat duplicate content is to set up a 301 redirect from the “duplicate” page to the original content page. This tells search engines that all traffic should be sent directly to the correct URL, preventing confusion and eliminating competition between pages with similar content.

For example, if you have two versions of your home page (www.example.com/home and www.example.com/index), you can use a 301 redirect so that only one version appears in search engine results pages (SERPs). This ensures users always land on the right page when they click your link, improving their experience with your site while also helping it rank better in SERPs.
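At the HTTP level, a 301 is just a status line plus a `Location` header naming the preferred URL. This minimal WSGI sketch (standard library only) assumes the hypothetical layout from the example above, where `/index` duplicates `/home`; the page body is a placeholder.

```python
# Hypothetical URL layout: /index duplicates /home, so it redirects there permanently.
REDIRECTS = {"/index": "/home"}
PAGES = {"/home": b"<h1>Welcome</h1>"}


def app(environ, start_response):
    """Minimal WSGI app: 301 for duplicate paths, 200 for real pages, 404 otherwise."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        # "301 Moved Permanently" tells browsers and crawlers the move is permanent.
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path in PAGES:
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [PAGES[path]]
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found"]
```

To try it locally, import `make_server` from `wsgiref.simple_server` and run `make_server("", 8000, app).serve_forever()`. On a production site, the same rule usually lives in the web server or CMS configuration rather than in application code.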

The rel=canonical Attribute

Another option for dealing with duplicate content is the rel=canonical attribute. Add `<link rel="canonical" href="URL OF ORIGINAL PAGE" />` to the HTML head of each duplicate version of a page, replacing the “URL OF ORIGINAL PAGE” portion with a link to the original (canonical) page.

A canonical tag passes roughly as much ranking power as a 301 redirect but often takes less development time, since it is implemented on individual pages rather than at the server level. With this tag in place, metrics such as links and popularity signals are credited to the preferred URL instead of being split across multiple URLs that compete against each other for rankings and dilute each other’s strength.
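To audit whether your pages actually carry the tag, you can parse their HTML with Python’s standard `html.parser` module. This is a sketch: `CanonicalFinder` and `canonical_of` are hypothetical names, and fetching the pages is left to you.

```python
from __future__ import annotations

from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values keep their case.
        if tag != "link":
            return
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        if "canonical" in rels and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_of(html: str) -> list[str]:
    """All canonical URLs declared in the HTML (ideally exactly one)."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


page = '<html><head><link rel="canonical" href="https://www.example.com/home" /></head></html>'
print(canonical_of(page))
```

A page that returns more than one canonical URL is worth fixing, since search engines may ignore conflicting hints.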

Google Search Console Parameter Handling Tool

In addition to redirects and canonical tags, Google Search Console’s parameter handling tool let site owners define how crawlers should treat parameters such as UTM codes or session IDs, which change the URL without changing what visitors see. With it, you could tell Google whether a given parameter should be ignored or kept during crawling, which reduced duplication and made crawl budget allocation more consistent. Note that Google retired this tool in 2022, citing its improved ability to work out which parameters are useful; today, canonical tags, 301 redirects, and consistent internal links are the main ways to handle parameter-driven duplicates.

Designing a pleasing, efficient user experience should be the primary focus when creating any digital product or website. UX problems can arise from many causes, such as unclear layouts, complicated menus, no feedback after completing tasks, and inconsistent styling between pages on the same domain. If not handled correctly, these issues can drive customers away.

Meaning of Multiple Site Issues in Duplicate Content

Duplicate content is a significant search engine optimization (SEO) issue. It can lead to lower rankings, reduced traffic, and, where the duplication looks manipulative, penalties from Google.

The term “duplicate content” refers to any text or images that appear on multiple web pages or websites. This includes pages with the same title tags, meta descriptions, headings, and body copy.

Multiple Site Issues

When duplicate content appears across multiple sites, it can be difficult for search engines to determine which page version should be ranked higher in the SERPs (search engine results pages).

This is especially true if several versions of the same page exist on different domains.

In this case, Google may not know which version of the page should rank higher. It will usually show only one of them at a time, and not necessarily the one you would choose.

URL Parameters

Another issue related to duplicate content is URL parameters.

These are strings of characters appended to URLs (after a “?”) that change how a page is displayed or tracked, such as language settings or sorting options like “sort by price” or “sort by date.”

If these parameters aren’t configured correctly, they cause duplicate content issues: each parameter combination creates a unique URL even though the page is otherwise identical. Search engines may then index many versions of essentially the same page and struggle to decide which one should rank.

Content Syndication

Content syndication occurs when you republish your original content elsewhere online, such as through RSS feeds or social media channels like Twitter and Facebook.

While this can increase exposure for your website, it also has SEO implications: there are now two copies of your original article online, one on your domain and another hosted elsewhere. If this is not managed correctly, search engines may be unsure which version to rank, potentially reducing visibility for both.

Canonicalization

The best way to handle these situations is canonicalization: add a rel=canonical tag to each page’s HTML that points to the version you want indexed. Search engines then know which version should take precedence whenever someone searches for terms related to the article.
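For a syndicated article, the tag goes in the partner’s copy and points back to your original URL (a cross-domain canonical). This sketch builds and inserts that tag; it assumes you control or can supply the partner’s HTML, and the helper names are hypothetical. Search engines treat canonical tags as hints rather than commands, so it is worth checking their current syndication guidance as well.

```python
from html import escape


def canonical_tag(original_url: str) -> str:
    """The <link> tag a syndicated copy uses to point back to the original article."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}" />'


def add_canonical(html: str, original_url: str) -> str:
    """Insert the canonical tag just before </head> in the syndicated copy."""
    head_end = html.lower().find("</head>")
    if head_end == -1:
        raise ValueError("no </head> found; cannot place the canonical tag")
    return html[:head_end] + canonical_tag(original_url) + html[head_end:]


copy = "<html><head><title>Copy</title></head><body></body></html>"
print(add_canonical(copy, "https://example.com/post"))
```

`html.escape` turns characters such as `&` in query strings into `&amp;`, which keeps the attribute valid HTML.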

Doing this reduces the negative impact of duplicate content while still letting you benefit from having multiple copies out there, without running afoul of Google’s algorithms!

Best Practices to Avoid Duplicate Content and Multiple Site Issues

Avoiding duplicate content and multiple site issues starts with closely monitoring indexed pages, redirects, and similar content. Watch for the same content appearing at different URLs, since this can hurt your search rankings, and use 301 redirects to send all traffic to the correct page.

It’s also essential to use the canonical tag whenever applicable so that Google knows which URL version should appear in its index. This helps avoid confusion between different page versions with similar or identical content. Tools such as Siteliner can also help identify any potential duplicate content issues before they become problematic for your website’s ranking in search engine results pages (SERPs).

Finally, consider keeping WordPress tag and category pages out of the index, since they often contain little unique information and may repeat posts found elsewhere on your site, which can hurt your SEO if left unchecked. By taking these steps, you can ensure your website is optimized correctly and not held back by duplicate content or multiple site issues in the future.

Duplicate content and multiple site issues are complex topics that require an understanding of the underlying technical details. Nevertheless, with a few basic tactics and good practices, you can keep your site optimized for search visibility while avoiding the problems that come with multiple sites or duplicate content. By following these principles, you can benefit from improved web traffic and higher SERP rankings.

Conclusion

In conclusion, duplicate content can seriously affect a website’s search engine rankings, traffic, and user experience. It can arise in various ways, including technical issues and intentional duplication. Fortunately, it can be fixed by consolidating pages, using canonical tags, and avoiding scraped or copied content. Website owners who understand the consequences of duplicate content and take steps to prevent it can improve their site’s search engine rankings, traffic, and user experience.